Let’s talk about deepfakes. They’re no longer just a sci-fi concept; they’re a real threat, targeting companies like yours in phishing attacks.

Not long ago, it might have seemed unlikely that deepfakes would be a significant risk you need to prepare for, but that’s changed. According to a recent study, deepfake fraud attempts rose by 3,000% in 2023.

Have you heard about the LastPass deepfake attack? In this post, we’ll break down what happened, how deepfakes are being used in attacks, and how you can prepare your team to defend against them.

What is deepfake phishing?

Deepfake phishing is a new type of cyber-attack where hackers use AI to create convincing fake images, videos, or audio that look and sound real.

Deepfake phishing is similar to standard phishing. The goal is simple: to deceive you into revealing sensitive information or engaging in fraudulent activities. These deepfakes can change backgrounds, alter appearances, replicate voices, or superimpose faces, making them highly believable.

You might be more familiar with fake videos of public figures saying or doing things they never did. But deepfakes aren’t limited to politics; the same technology is being used for highly believable scams.

How is deepfake phishing being used?

Let’s dive deeper into the world of deepfakes and explore how they’re shaking things up in Cyber Security.

Picture this: in Hong Kong, a finance worker at a multinational company was duped into transferring $25 million.

- How? Fraudsters used deepfake technology during a video call to impersonate the company’s CFO and other key executives.

You might be wondering, “Were there any telltale signs that it was a fake?” In the past, AI-generated fakes were a bit rough around the edges, easily spotted by their mismatched lip movements and uneven facial features. But times have changed dramatically. The technology has advanced so much that it now produces real-time, highly realistic fakes that are incredibly challenging to detect.

- Voice phishing: Now, let's talk about deepfake voice phishing—it's startlingly easy these days. With just a brief audio clip, scammers can recreate someone’s voice with chilling accuracy.

This tech is fuelling new kinds of scams, such as fake distress calls from what sounds like a family member or a colleague, urging you to send money urgently.

There are even more sinister uses, like simulating a child’s voice in a fake kidnapping scam. In the corporate world, these voice fakes might mimic a CFO or another executive to green-light unauthorised financial transfers.

The LastPass deepfake attack: what happened?

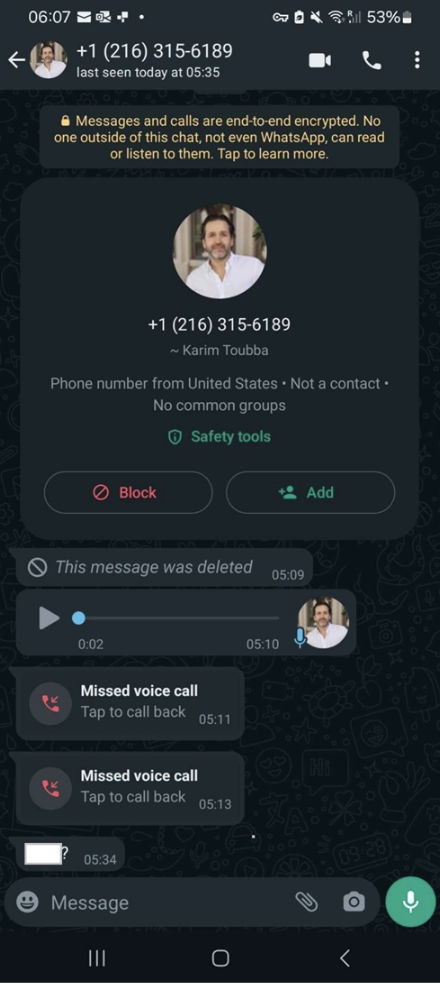

LastPass, a well-known name in password management, recently dodged a cyber bullet thanks to the alertness of one of their employees.

Here’s the scoop: The employee started getting calls, texts, and even a voicemail on WhatsApp that seemed off. The messages used convincing deepfake audio impersonating LastPass’s CEO. That’s right, someone had cooked up a fake version of the boss’s voice to try and trick the employee into taking some risky action.

Luckily, this employee wasn’t fooled.

- What raised suspicion? The strange choice of communication channel and the pushy tone raised a red flag.

- What happened next? Instead of responding, they reported everything to LastPass's internal security team. This quick thinking not only prevented a potential disaster but also helped LastPass step up their game against similar targeted attacks.

- Sharing their story: LastPass has gone public with this story to teach a valuable lesson: deepfake technology isn’t just for creating viral videos; it’s also a growing tool for cyber-criminals.

Mike Kosak, a top analyst at LastPass, pointed out in a blog post that while we often hear about deepfakes in the context of politics, the private sector needs to watch out too. The tech is out there, and it’s getting better and more accessible. This incident is a clear call to action for companies everywhere to educate their teams and tighten up security protocols.

How to protect against deepfake phishing attacks

Now that we’ve discussed the risk of deepfake phishing, let’s dive into how you can defend your business against it. Just like with all Cyber Security challenges, there’s no one-size-fits-all solution, so be wary of any provider claiming otherwise. Instead, it’s about implementing layered measures to minimise deepfake risk at every opportunity.

- Security Awareness Training

Provide your team with the knowledge and awareness they need to tackle deepfake phishing head-on. Your team might be familiar with standard phishing tactics, such as being cautious about clicking on links and verifying emails, but deepfakes elevate the threat to a new level.

Imagine they received a Teams call from a genuine corporate account, and the person on the video looks just like the finance director—it’s going to be much harder to spot. That’s why your training programme needs to evolve with the phishing threats of today.

Spotting deepfakes isn’t always easy, but there are definite signs to watch for. While technology advances make visual clues less obvious, keep an eye out for things like jittering or blurring in videos, and listen for strange audio distortions.

But the real key is making sure your team knows these attacks are happening and understands how they could be targeted based on their roles in the company. When it comes to social engineering tactics, the warning signs are much like those of classic phishing attacks. Stay wary of urgent or unusual requests.

Giving your team this knowledge goes a long way in reducing their risk of falling for scams. But it’s important to remember that even with this awareness, nobody can spot deepfakes with 100% certainty.

- Secure Your Account Access

Step up security on your internal accounts. Deepfake phishing is far more convincing when attackers operate from real accounts that they’ve compromised. To guard against unauthorised access, we strongly recommend implementing multi-factor authentication (MFA). But keep in mind, not all MFA options are created equal.

Avoid relying solely on biometrics such as facial recognition, which can be vulnerable to deepfakes. A safer bet is to use app-based one-time passwords or physical security keys, which offer robust protection against these kinds of threats.
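To make "app-based one-time passwords" a little more concrete, here is a minimal Python sketch of the time-based one-time password (TOTP) scheme described in RFC 6238, which many authenticator apps follow. Treat it as an illustration rather than production code: the function names (`hotp`, `totp`, `verify_totp`) and the one-step tolerance window are our own assumptions, and in practice you would lean on your identity provider or a well-maintained library instead of writing this yourself.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) for a given counter value."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte pick a 4-byte window.
    offset = digest[-1] & 0x0F
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30) -> str:
    """Time-based code (RFC 6238): HOTP over the number of elapsed time steps."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step))


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept the current code plus `window` steps either side (illustrative choice)."""
    now = time.time() if at is None else at
    counter = int(now // step)
    for drift in range(-window, window + 1):
        candidate = counter + drift
        if candidate < 0:
            continue  # no time steps exist before the Unix epoch
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(hotp(secret, candidate), submitted):
            return True
    return False


if __name__ == "__main__":
    demo_secret = b"12345678901234567890"  # RFC test secret, never use in production
    code = totp(demo_secret)
    print("Current code accepted:", verify_totp(demo_secret, code))
```

The code depends on a secret shared at enrolment and on the current time, not on what someone looks or sounds like, so a cloned voice or face on its own gives an attacker nothing to type in.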

- Use AI to Fight Back

Use AI and deep learning to counter the rising threat of deepfake phishing. Set up AI-powered detection models to spot and block these attacks. These models are usually better at catching deepfakes than we are, but they’re not perfect. With cyber-criminals always advancing, your defence strategy must outmatch their technical innovations and speed.

- Implement Failsafes

Let’s face it, both people and AI have their limits when detecting threats. It’s essential to put in place extra layers of defence. Adding workflow or technical barriers can prevent one phishing email mistake from putting your whole organisation at risk. Take actions like requiring multiple approvals for big transactions.

When it comes to accessing sensitive data or large amounts of money, always insist on checks from multiple people or systems, such as MFA. These steps might slow things down, but they’re crucial for giving your team time to think and better protect against deepfake attacks. Involving more people and systems strengthens your defence, making your organisation safer.
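As a rough illustration of the multiple-approval idea, the Python sketch below holds a large transfer until two different people, neither of them the requester, have signed it off. Everything in it is hypothetical: the `TransferRequest` class, the 10,000 threshold and the two-approver rule are placeholders for whatever your finance system and policy actually define.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical policy values; set these to match your own finance policy.
LARGE_TRANSFER_THRESHOLD = 10_000
APPROVALS_FOR_LARGE_TRANSFER = 2


@dataclass
class TransferRequest:
    requester: str
    amount: float
    approvers: Set[str] = field(default_factory=set)

    def approve(self, approver: str) -> None:
        """Record an approval; the requester can never approve their own request."""
        if approver == self.requester:
            raise ValueError("requester cannot approve their own transfer")
        # A set means the same person approving twice still counts once.
        self.approvers.add(approver)

    def approvals_needed(self) -> int:
        """Large transfers need two distinct approvers, smaller ones need one."""
        if self.amount >= LARGE_TRANSFER_THRESHOLD:
            return APPROVALS_FOR_LARGE_TRANSFER
        return 1

    def can_execute(self) -> bool:
        return len(self.approvers) >= self.approvals_needed()


if __name__ == "__main__":
    request = TransferRequest(requester="alice", amount=25_000)
    request.approve("bob")
    print("After one approval:", request.can_execute())
    request.approve("carol")
    print("After two approvals:", request.can_execute())
```

Even if one person is taken in by a convincing call, the transfer still waits on a second colleague who was not on that call, which is exactly the breathing space a failsafe is meant to create.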

Looking ahead: Is your team prepared to tackle deepfake attacks?

The real question here is: Are your security measures keeping up? Deepfakes and voice cloning add a new layer of complexity to phishing schemes, and your defences need to be sophisticated enough to match. It’s not just about catching the easy scams anymore; it’s about preparing for the ones that are harder to detect. Are you adapting fast enough to stay ahead of these threats?

If you’re seeking advice on how your company can effectively tackle deepfakes, don’t hesitate to reach out to our experts. You can call us at 0121 663 0055 or send an email to enquiries@equilibrium-security.co.uk. We’re here to help!
